In today’s IT environments, organizations continue to manage an ever-growing quantity of systems. This requires organizations to depend more on automation to perform tasks. Deploying and managing an operating system like Red Hat Enterprise Linux (RHEL) can be time-consuming without automation, with administration and maintenance tasks taking significantly longer to complete.

What are RHEL system roles?

RHEL system roles are a collection of Ansible roles that help automate the management and configuration of RHEL systems. They can also help provide consistent and repeatable configuration, reduce technical burdens and streamline administration. In this post, we’ll show you how to get started with RHEL system roles.

RHEL system roles overview

Administrators can select from a library of common services and configuration tasks provided by RHEL system roles. The roles provide a consistent interface for managing system configurations across multiple versions of RHEL running on physical, virtual, private cloud and public cloud environments.

RHEL system roles are supported with your RHEL subscription and are packaged as RPMs included in RHEL. However, if you have a Red Hat Ansible Automation Platform subscription, you can also access RHEL system roles from the Ansible automation hub for use in the Ansible Automation Platform. Likewise, if you have a Red Hat Smart Management subscription and utilize Red Hat Satellite, you can use RHEL system roles from Satellite.

Editor’s note - As of April 1, 2023, Red Hat Smart Management is now called Red Hat Satellite. Product pricing, content and services have not changed. For more information, visit the Red Hat Satellite product page or contact satellite@redhat.com.

You will find a wide variety of RHEL system roles.

Security-related roles:

- selinux allows for configuration of SELinux

- certificate manages TLS/SSL certificate issuance and renewal

- tlog configures session recording

- nbde_client and nbde_server configure network bound disk encryption

- ssh and sshd configure the SSH client and server, respectively

- crypto_policies configures the system-wide cryptographic policies

- vpn configures virtual private networks

- firewall configures the firewall

Configuration-related roles:

- timesync configures time synchronization

- network configures networking

- kdump configures the kernel crash dump

- storage configures local storage

- kernel_settings configures kernel settings

- metrics configures system metrics (using Performance Co-Pilot and Grafana)

- logging configures logging (rsyslog)

- postfix configures the postfix email server

- ha_cluster configures high availability clustering

- cockpit configures the RHEL web console

Workload-related roles:

- SAP-related system roles assist with implementing the SAP workload on RHEL:

  - sap_general_preconfigure for SAP general preconfiguration

  - sap_netweaver_preconfigure for SAP NetWeaver preconfiguration

  - sap_hana_preconfigure for SAP HANA preconfiguration

  - sap_hana_install for SAP HANA installation (in technology preview)

  - sap_ha_install_hana_hsr to set up HANA system replication (in technology preview)

  - sap_ha_install_pacemaker to set up RHEL HA pacemaker cluster (in technology preview)

  - sap_ha_prepare_pacemaker for RHEL HA cluster preconfiguration (in technology preview)

  - sap_ha_set_hana to set up RHEL HA solutions for SAP HANA (in technology preview)

- The Microsoft SQL Server system role can automate the installation, configuration, and tuning of Microsoft SQL Server on RHEL. In RHEL 8.7 and 9.1, this role added support for setting up Microsoft SQL Server Always On availability groups.

For an up-to-date list of available roles, as well as a support matrix that details which versions of RHEL are supported by each role, refer to this page.

Control node

RHEL system roles utilize Ansible, which has a concept of a control node. The control node is where Ansible and the RHEL system roles are installed. The control node needs to have connectivity over SSH to each of the hosts that will be managed with RHEL system roles (which are referred to as managed nodes). The managed nodes do not need to have the RHEL system roles or Ansible installed on them.

- Control node: The system with Ansible and the RHEL system roles installed.

- Managed nodes: The systems being managed by RHEL system roles.

There are several options for what can be used as the control node for RHEL system roles: Ansible Automation Platform, Red Hat Satellite, or a RHEL host.

If you have an Ansible Automation Platform subscription, it is recommended to use the Ansible Automation Controller as the control node. Automation Controller offers advanced features such as a visual dashboard, job scheduling, notifications, workflows, advanced inventory management, etc. For more information on Ansible Automation Platform, refer to this site.

For people utilizing Red Hat Satellite, it is also possible to use Satellite as the control node. Please refer to this previous post in which I cover an overview of how to set this up.

You can also utilize a RHEL host as the control node. RHEL 9 and RHEL 8 include Ansible Core and RHEL system roles in the AppStream repository. The RHEL system roles and Ansible Core packages can be installed on RHEL 9 or RHEL 8 with the following command:

# dnf install rhel-system-roles ansible-core

For more information, please refer to the scope of support for the Ansible Core package included in RHEL.

In the example shown in this post, I’ll be using a RHEL 9 host as the control node.

SSH configuration

The control node needs to have SSH access to each of the managed hosts. If you have firewalls on your network, this might involve ensuring that port 22 is open between the control node and each of the managed hosts.

In addition, the control node will need to be able to authenticate over SSH to each managed node and escalate privileges to the root account.

If you are utilizing Ansible Automation Controller as your control node, you probably already have this set up as this is a basic prerequisite to run a playbook on hosts.

If you are utilizing Red Hat Satellite as your control node, it utilizes the remote execution configuration to connect and authenticate to hosts. If you don’t already have remote execution configured in your Satellite environment, refer to the Satellite documentation on how to set this up.

If you are utilizing a RHEL control node, you will need to:

- Determine which account you would like to use on the control node and managed hosts.

- While it’s possible to use the root account, it is generally recommended to create and utilize a service account.

- Generate an SSH key for this user on the control node with the ssh-keygen command.

- Distribute the public key to each of the managed hosts (which the ssh-copy-id command can help with).

- If you are using a service account, you’ll need to configure sudo access on each managed node so that the service account can escalate its privileges to root.
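As a rough sketch of the steps above for a RHEL control node, the following script prints the commands involved rather than running them. The account name ansible, the ed25519 key type, and the passwordless sudo rule are assumptions of mine, not requirements of RHEL system roles, and print_ssh_setup_plan is a hypothetical helper name. Adjust all of them to your own security policies.

```shell
#!/bin/sh
# Dry-run sketch: print the SSH setup commands for a RHEL control node.
# Assumptions (not from this post): the service account is named "ansible",
# an ed25519 key is used, and passwordless sudo is acceptable.
set -e

SERVICE_USER="ansible"
MANAGED_HOSTS="rhel9-server1 rhel9-server2 rhel8-server1 rhel8-server2"
KEY_FILE="$HOME/.ssh/id_ed25519"

print_ssh_setup_plan() {
    # Step 1: generate a key pair for the service account on the control node.
    echo "ssh-keygen -t ed25519 -f $KEY_FILE"

    # Step 2: distribute the public key to each managed host.
    for host in $MANAGED_HOSTS; do
        echo "ssh-copy-id -i $KEY_FILE.pub $SERVICE_USER@$host"
    done

    # Step 3: sudoers drop-in that lets the service account escalate to root.
    echo "# on each managed host, as root: visudo -f /etc/sudoers.d/$SERVICE_USER"
    echo "$SERVICE_USER ALL=(ALL) NOPASSWD: ALL"
}

print_ssh_setup_plan
```

Review the printed commands, then run them by hand as the service account on the control node (steps 1 and 2) and as root on each managed host (step 3).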

After the Ansible inventory is set up, the Ansible ping module can validate that this SSH configuration was set up correctly (this will be covered later in the post).

Inventory file

Ansible needs to be provided a list of managed nodes that it should run the RHEL system roles on. This is done via an Ansible inventory file.

If you are utilizing Ansible Automation Controller as your control node, there are a wide variety of options for inventory. For more information, refer to the documentation.

For people using Satellite as the control node, you can assign Ansible roles to either individual hosts or to groups of hosts via host groups. For more information refer to the Satellite documentation.

If you are utilizing a RHEL control node, you’ll need to define an inventory in a text file.

The simplest inventory file lists one host per line, as shown in this example:

$ cat inventory
rhel9-server1
rhel9-server2
rhel8-server1
rhel8-server2

It is also possible to define groups in the inventory file as in the following example where the prod and dev groups are defined:

$ cat inventory
[prod]
rhel9-server1
rhel8-server1

[dev]
rhel9-server2
rhel8-server2

The previous two examples use INI-formatted inventories. It is also possible to define inventories in YAML format. The following example defines the same prod and dev groups, but in a YAML-formatted inventory:

$ cat inventory.yml
all:
  children:
    prod:
      hosts:
        rhel9-server1:
        rhel8-server1:
    dev:
      hosts:
        rhel9-server2:
        rhel8-server2:

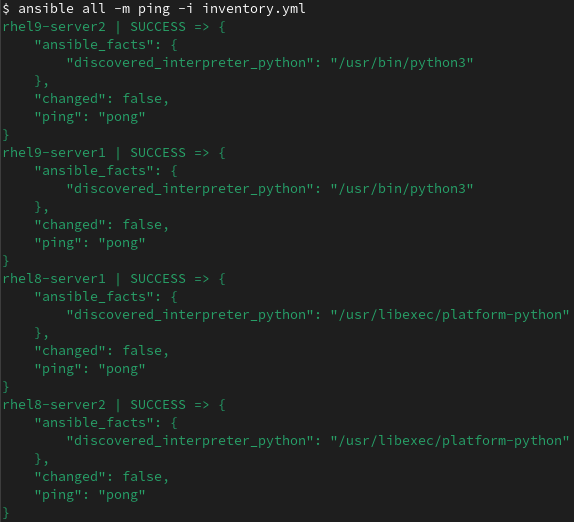

Now that we’ve defined an inventory file, we can utilize the Ansible ping module to validate that our SSH configuration was set up correctly and that our control node can communicate with and connect to each managed host. The command in the following example tells Ansible to use the ping module, the inventory file named inventory.yml, and to connect to all of the hosts defined in the inventory:

In this example, Ansible successfully connected to all four hosts I had defined in the inventory file.

You can also define variables in the inventory. We’ll cover that later when we talk about variables.

Ansible inventories are very powerful and flexible, and I’ve just covered the basics. For more information on Ansible inventory files, refer to the Ansible documentation.

Role variables

Ansible variables allow us to specify our desired configuration to the RHEL system roles. For example, if we are using the timesync role to set up time synchronization, we need the ability to tell the timesync role which NTP servers in our environment should be utilized by our managed nodes.

Each role documents its variables in a README.md file, which is available on the control node at /usr/share/doc/rhel-system-roles/<role_name>/README.md.

For example, the timesync role uses the timesync_ntp_servers variable to specify which NTP servers should be used. There are also additional variables for the timesync role that are documented in the README.md file, such as timesync_ntp_provider, timesync_min_sources, etc.
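To get a quick overview of a role’s variables from the control node, something like the following sketch can help. It assumes that a role’s variables are prefixed with the role name (as with timesync_ntp_servers), which is only a heuristic, and list_role_variables is a hypothetical helper name. The README itself remains the authoritative description of each variable.

```shell
#!/bin/sh
# Sketch: list variable names mentioned in a role's README.md.
# Assumption: role variables start with "<role_name>_", e.g. timesync_ntp_servers.
set -e

DOC_DIR="/usr/share/doc/rhel-system-roles"

list_role_variables() {
    role="$1"
    readme="$DOC_DIR/$role/README.md"
    if [ ! -r "$readme" ]; then
        echo "README not found: $readme" >&2
        return 1
    fi
    # Extract every word that starts with the role name, then de-duplicate.
    grep -oE "${role}_[a-z0-9_]+" "$readme" | sort -u
}

# Prints the names found, or a message if the roles are not installed here.
list_role_variables timesync || true
```

The same function can be pointed at any other installed role, for example list_role_variables kernel_settings.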

Ansible variables are another powerful feature of Ansible, and again, I’ve only covered the basics here. For more information, refer to the Ansible documentation.

If you are using Satellite as your RHEL system roles control node, refer to this blog post for information and examples on how to define the role variables in Satellite.

There are two main locations where the Ansible variables can be specified: in the inventory, or directly in the playbooks. I’ll cover these two options in the next sections.

Defining role variables directly in playbook

The role variables can be specified directly in the playbook that calls the RHEL system role. For example, the playbook below defines the timesync_ntp_servers variable to specify 3 NTP servers and also specifies that they should utilize the iburst option.

$ cat timesync.yml
- hosts: all
  become: true
  vars:
    timesync_ntp_servers:
      - hostname: ntp1.example.com
        iburst: yes
      - hostname: ntp2.example.com
        iburst: yes
      - hostname: ntp3.example.com
        iburst: yes
  roles:
    - redhat.rhel_system_roles.timesync

We could run this playbook with the ansible-playbook command, specifying the playbook file name and our previously created inventory file:

$ ansible-playbook timesync.yml -i inventory.yml

While defining the role variables directly in the playbook file is easy to do and convenient, it will require you to edit the playbook every time the role variables need to be updated (for example, if your NTP server was replaced and you needed to update all of the hosts to utilize the new NTP server). It is considered a better practice to define the role variables outside of the playbook so that the playbook doesn’t have to be frequently edited and updated.

Defining variables in the inventory

It is also possible to define the role variables in the Ansible inventory rather than in the playbook. As previously mentioned, this will avoid the need to frequently edit the playbook itself.

By defining the variables in the inventory, we can also easily define variables based on the inventory groups.

In this example, I’d like to use the timesync system role to configure time synchronization on my servers, and I would like to define one set of NTP servers for the hosts in the prod inventory group, and a different set of NTP servers for the hosts in the dev inventory group.

I’ll start by creating an inventory directory:

$ mkdir inventory
$ cd inventory

Within the inventory directory, I’ll create an inventory file named inventory.yml, defining two servers in the prod group, and two servers in the dev group:

$ cat inventory.yml
all:
  children:
    prod:
      hosts:
        rhel9-server1:
        rhel8-server1:
    dev:
      hosts:
        rhel9-server2:
        rhel8-server2:

Within the inventory directory, I’ll create a group_vars directory:

$ mkdir group_vars
$ cd group_vars

And within the group_vars directory, I’ll create both a prod.yml file and a dev.yml file to define the variables for hosts in the prod inventory group, and dev inventory group, respectively. This will result in the servers within the dev group being configured with one set of NTP servers, and the servers within the prod group being configured with a different set of NTP servers.

$ cat dev.yml
timesync_ntp_servers:
  - hostname: dev-ntp1.example.com
    iburst: yes
  - hostname: dev-ntp2.example.com
    iburst: yes
  - hostname: dev-ntp3.example.com
    iburst: yes

$ cat prod.yml
timesync_ntp_servers:
  - hostname: prod-ntp1.example.com
    iburst: yes
  - hostname: prod-ntp2.example.com
    iburst: yes
  - hostname: prod-ntp3.example.com
    iburst: yes

I can then create a new playbook that is much shorter and simpler because it no longer contains the variables:

$ cd ../..
$ cat timesync2.yml
- hosts: all
  become: true
  roles:
    - redhat.rhel_system_roles.timesync

I can then run this playbook with ansible-playbook, specifying that the inventory directory should be used as the inventory source:

$ ansible-playbook timesync2.yml -i inventory/

Once the playbook runs, the two servers in the dev group will be configured with the dev NTP servers, and the two servers in the prod group will be configured with the prod NTP servers.
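For reference, the layout built up in this section can be recreated in one go with a script along these lines. This is only a sketch: the WORKDIR variable and the use of a temporary directory are my own choices for illustration, while the file names and contents mirror the examples above.

```shell
#!/bin/sh
# Sketch: recreate the inventory directory, group_vars files and playbook
# from this section. WORKDIR is a hypothetical variable; by default a
# throwaway temporary directory is used.
set -e

WORKDIR="${WORKDIR:-$(mktemp -d)}"
mkdir -p "$WORKDIR/inventory/group_vars"

# Inventory with the prod and dev groups.
cat > "$WORKDIR/inventory/inventory.yml" <<'EOF'
all:
  children:
    prod:
      hosts:
        rhel9-server1:
        rhel8-server1:
    dev:
      hosts:
        rhel9-server2:
        rhel8-server2:
EOF

# One group_vars file per group, each with its own set of NTP servers.
for env in dev prod; do
    cat > "$WORKDIR/inventory/group_vars/$env.yml" <<EOF
timesync_ntp_servers:
  - hostname: ${env}-ntp1.example.com
    iburst: yes
  - hostname: ${env}-ntp2.example.com
    iburst: yes
  - hostname: ${env}-ntp3.example.com
    iburst: yes
EOF
done

# Playbook without any variables; they now come from the inventory.
cat > "$WORKDIR/timesync2.yml" <<'EOF'
- hosts: all
  become: true
  roles:
    - redhat.rhel_system_roles.timesync
EOF

# Show the resulting files.
find "$WORKDIR" -type f | sort
```

Before running the playbook, ansible-inventory -i inventory/ --graph shows how Ansible groups the hosts, and ansible-inventory -i inventory/ --host rhel9-server1 shows the variables that a given host will receive.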

Understanding the next steps

RHEL system roles were developed because automation is essential when it comes to managing complex environments. Automation can help you deliver consistency and keep up with increasing demands. Here are some next steps that you can take to start planning how to implement RHEL system roles in your environment.

- Take RHEL system roles for a quick test drive in one of our hands-on interactive lab environments, which walk you through common RHEL system roles use cases.

- Learn more about available roles and get detailed steps for installation in this RHEL system roles knowledgebase article or the RHEL documentation.

- Once you’ve installed the RHEL system roles, each role has documentation available under the /usr/share/doc/rhel-system-roles directory. This documentation includes an overview of the role, an explanation of the role variables that can be defined, and example playbooks.

- Interested in leveraging RHEL system roles from Satellite? Refer to the Automating host configuration with Red Hat Satellite and RHEL system roles blog post.

About the author

Brian Smith is a Product Manager at Red Hat focused on RHEL automation and management. He has been at Red Hat since 2018, previously working with Public Sector customers as a Technical Account Manager (TAM).
